Disclaimer: This article introduces a technique developed by the author and does not constitute an explanation, interpretation or commentary on a peer-reviewed paper.

If you tell an LLM to grade an article on a scale of 1-10, you will get a different answer almost every time (looking at you, Gemini🫵).

Evaluating creative or perceptual qualities — such as how captivating an article is, how realistic an image appears, or how impactful a speech feels — has always been difficult. These qualities rely heavily on human perception, context, and emotion. Two people might read the same piece of writing and walk away with entirely different impressions, even when both are “right.” When artificial intelligence enters this space, the challenge becomes even more apparent. AI models can measure structure, grammar, or data accuracy, but they struggle when asked to assess inherently subjective traits. The same model might give inconsistent scores for creativity or engagement across different runs, simply because these qualities lack clear, objective anchors (still looking at you, Gemini🫵).

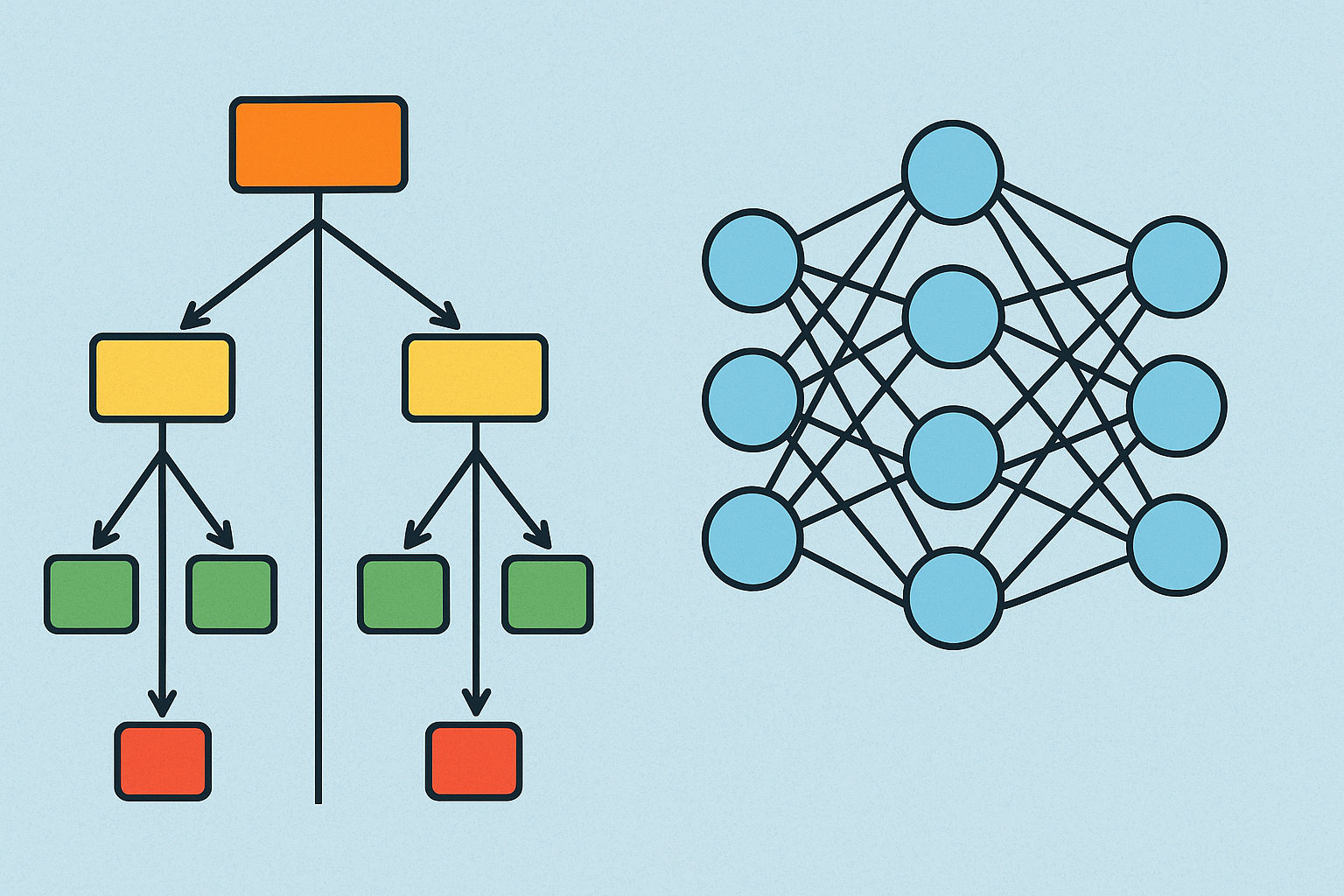

This kind of inconsistency isn’t useful. In an era where AI can be a massive scaling advantage, non-determinism becomes a real problem for automation. You can’t automate what you can’t predict, or more precisely, automating actions with unreliable outcomes is rubbish. In this article, I share a method I call Binary Path Evaluation (BPE): a way to make AI scoring more consistent by breaking subjective qualities into smaller, observable decisions. This approach isn’t for everything. It works best when the goal is to rank or compare text content that is intended to structurally serve a purpose, such as articles, resumes, online user profiles, and answers to certain kinds of questions in a conversation (more on these later).

The Idea: Applying Binary Checks on Structure

BPE is built on the insight that certain subjective qualities often arise from observable structural patterns. For instance, one answer to a question may feel better than another because it includes the right points in the right places. So, in the case of an article, instead of asking an LLM to make a vague holistic judgment such as “Is this article engaging?”, the process decomposes that abstract trait into a sequence of conditional binary checks informed by human guidance.

Each check focuses on a clear, verifiable attribute that contributes to the larger quality being assessed. For instance, to check if an article is informative, we can look for structural signs that point to it. Does it introduce the topic early? Does it support its ideas with examples or data? Does it end with a clear takeaway the reader can apply? These are observable cues that reveal how much real value the article delivers. And for each high level check, we can further break it down for even deeper evaluation.

Another example: To check if an article is persuasive, we can look for structural signals that show how it builds conviction. Does it clearly state a position or claim early on? Does it provide evidence, comparisons, or reasoning that supports that claim? Does it address possible counterpoints or opposing views? And does it end with a conclusion that reinforces the main message? These are observable patterns that reveal how well the article guides a reader toward agreement — without relying on subjective impressions like “strong argument” or “compelling tone.”

Through these conditional yes/no decisions, subjective evaluations become more deterministic — not because we remove human interpretation, but because we ground it in concrete, repeatable observations. This turns qualitative grading into something that can be systematically reasoned about, both by humans and AI.
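As a rough illustration, the persuasiveness questions above could be run as a flat list of yes/no checks. This is only a sketch: `Judge`, `run_checks`, and `toy_judge` are hypothetical names, and `toy_judge` is a crude keyword stand-in for an LLM call that is constrained to answer yes or no.

```python
from typing import Callable

# A judge answers one yes/no question about one text. In practice this would
# wrap an LLM call restricted to "yes" or "no"; that wrapper is not shown here.
Judge = Callable[[str, str], bool]

PERSUASIVE_CHECKS = [
    "Does it clearly state a position or claim early on?",
    "Does it provide evidence, comparisons, or reasoning that supports that claim?",
    "Does it address possible counterpoints or opposing views?",
    "Does it end with a conclusion that reinforces the main message?",
]


def run_checks(text: str, checks: list[str], judge: Judge) -> dict[str, bool]:
    """Ask every binary question once and record the verdict."""
    return {question: judge(question, text) for question in checks}


def toy_judge(question: str, text: str) -> bool:
    """Hypothetical stand-in for an LLM: keyword cues, for demonstration only."""
    cues = {
        "position": ("i argue", "we should"),
        "evidence": ("for example", "data"),
        "counterpoints": ("critics", "however"),
        "conclusion": ("in conclusion", "to sum up"),
    }
    lowered = text.lower()
    for key, words in cues.items():
        if key in question.lower():
            return any(word in lowered for word in words)
    return False


article = (
    "I argue that teams should write tests first. For example, one project "
    "cut regressions after adopting the habit. However, critics point to the "
    "upfront cost."
)
results = run_checks(article, PERSUASIVE_CHECKS, toy_judge)
for question, verdict in results.items():
    print("yes" if verdict else "no ", question)
```

Each verdict is stored next to the question that produced it, so a grader can see which structural signal was missing instead of receiving a single opaque number.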

Adding Depth to Binary Evaluation with Weights

While Binary Path Evaluation relies on yes/no checks for structure and explainability, not every condition carries the same weight. Some are core anchors that are essential to meaning (you know, they hold more … stuff to them), while others are supportive or bonus checks that refine quality. Weighted scoring allows each binary path to contribute in proportion to its importance, again, as determined by human experts.

An arbitrary example:

1. Does the answer mention {Key Point A}?
   - If yes → Does it include the correct explanation about {Key Point A}?
     - If yes → Full credit (+0.3).
     - If no → Partial credit (+0.15).
   - If no → Does it reference a closely related idea?
     - If yes → Open a new evaluation path to confirm logical relation (+0.1).
     - If no → Mark as missing (+0).
2. Does the answer mention {Key Point B}?
   - If yes → Does it connect {Key Point B} to {Key Point A} or the main question? (+0.25).
     - If yes → Does it show reasoning or cause-effect? (+0.1 bonus).
   - If no → Does it imply {Key Point B} indirectly (e.g., via example or analogy)? (+0.1).

These conditional checks form a branching decision graph — each yes or no guiding the next step. Some branches are optional, rewarding deeper insight without penalizing absence (this is very important).
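One way to picture this branching graph is as a small linked structure where each check points to its follow-up on yes and on no. The sketch below encodes only the {Key Point A} path from the example above. `Check` and `walk` are hypothetical names, the judge is a canned lookup rather than a real model, and the "open a new evaluation path" step is simplified to a plain +0.1.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Check:
    question: str
    credit_yes: float = 0.0           # credit earned when the verdict is yes
    credit_no: float = 0.0            # credit earned when the verdict is no
    if_yes: Optional["Check"] = None  # next check after a yes, if any
    if_no: Optional["Check"] = None   # next check after a no, if any


def walk(root: Check, judge: Callable[[str], bool]) -> tuple[float, list[str]]:
    """Follow one path through the graph, summing credit and logging each step."""
    score, trace = 0.0, []
    node = root
    while node is not None:
        verdict = judge(node.question)
        credit = node.credit_yes if verdict else node.credit_no
        score += credit
        trace.append(f"{node.question} -> {'yes' if verdict else 'no'} (+{credit})")
        node = node.if_yes if verdict else node.if_no
    return score, trace


key_point_a = Check(
    "Does the answer mention {Key Point A}?",
    if_yes=Check(
        "Does it include the correct explanation about {Key Point A}?",
        credit_yes=0.3,
        credit_no=0.15,
    ),
    if_no=Check("Does it reference a closely related idea?", credit_yes=0.1),
)

# Canned verdicts standing in for model answers: mentioned, but explained wrongly.
canned = {
    "Does the answer mention {Key Point A}?": True,
    "Does it include the correct explanation about {Key Point A}?": False,
}
score, trace = walk(key_point_a, lambda question: canned.get(question, False))
print(score)
print("\n".join(trace))
```

Because only one branch is followed per verdict, the trace doubles as the explanation for the score.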

Though grading a single answer may seem detailed, this structure ensures every outcome is explainable, adaptable, and scalable. Rules can evolve without breaking the system, and results remain consistent — a critical advantage when automating evaluation at scale.

Example: Grading an Answer to an Interview Question

Let’s take a practical example that may come up in a real-life scenario - evaluating interview answers. Suppose we want to grade an answer to this open-ended question:

Question:

Explain how asynchronous I/O makes Node.js suitable for scalable web services.

To evaluate this, we define a set of objective, observable checks — structural and factual points that together indicate understanding.

Step 1: Identify key evaluation points: This is us just highlighting the key ideas we are looking for in this single answer.

- The answer must define asynchronous I/O as non-blocking operations allowing multiple tasks to run concurrently.
- It must mention Node.js’s event-driven, non-blocking I/O model.
- It should explain that this design lets Node handle many connections using a single thread.
- It must explain why asynchronous I/O improves scalability, such as:
  - Threads aren’t blocked waiting for I/O.
  - Efficient CPU utilization.
  - Lower memory overhead compared to multithreading.
- It must connect the idea to web services by referencing:
  - Handling many concurrent users.
  - Responding efficiently to multiple HTTP requests.
  - Supporting real-time features (optional bonus).
- Optionally, it may include a comparison or example contrasting blocking vs. non-blocking models.

Step 2: Applying Binary Path Evaluation: Each point becomes a binary condition, with nested paths and weighted significance.

- Does the answer define asynchronous I/O? (weight: 0.25)
  - If yes → Does it mention non-blocking operations? (+0.15)
    - If yes → Does it state that tasks run concurrently? (+0.1)
  - If no → Does it indirectly reference concurrent operations without saying “asynchronous”? (+0.1 partial)
- Does it mention that Node.js uses an event-driven, non-blocking I/O model? (weight: 0.2)
  - If yes → Does it explain that this allows multiple connections on a single thread? (+0.1)
  - If no → Does it describe the same concept indirectly (e.g., “Node handles many users at once”)? (+0.05 partial)
- Does it explain why asynchronous I/O improves scalability? (weight: 0.25)
  - If yes → Check for:
    - Threads not blocked by I/O (+0.1)
    - Efficient CPU usage (+0.05)
    - Lower memory overhead (+0.05)
  - If no → Does it imply scalability benefits without examples? (+0.05 partial)
- Does it connect the concept to web services? (weight: 0.2)
  - If yes → Check for:
    - Mentions handling many concurrent users (+0.05)
    - Responding to multiple HTTP requests (+0.05)
    - Mentions real-time features (+0.05 bonus)
  - If no → Check if scalability is mentioned abstractly but not tied to “web services” (+0.05 partial)
- Optional:
  - Includes comparison or example (e.g., blocking vs. non-blocking model) (+0.1 bonus)
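The Step 2 checks can be written down as data and scored with a few lines of recursion. The sketch below is one possible reading, not a definitive implementation: it adds only the increments written next to each sub-check and treats the headline weights as descriptive, which is an assumption. `Node`, `RUBRIC`, `score`, and the snake_case rule ids are all hypothetical names, and the verdicts are hard-coded where a real system would ask a model each yes/no question.

```python
from dataclasses import dataclass, field


@dataclass
class Node:
    key: str                # id used to look up the yes/no verdict
    credit: float = 0.0     # added when the verdict is yes
    on_yes: list["Node"] = field(default_factory=list)  # follow-ups after a yes
    on_no: list["Node"] = field(default_factory=list)   # fallbacks after a no


RUBRIC = [
    Node("defines_async_io",
         on_yes=[Node("mentions_non_blocking", 0.15,
                      on_yes=[Node("states_tasks_run_concurrently", 0.10)])],
         on_no=[Node("references_concurrency_indirectly", 0.10)]),
    Node("mentions_event_driven_model",
         on_yes=[Node("explains_single_thread_connections", 0.10)],
         on_no=[Node("describes_model_indirectly", 0.05)]),
    Node("explains_scalability",
         on_yes=[Node("threads_not_blocked", 0.10),
                 Node("efficient_cpu_usage", 0.05),
                 Node("lower_memory_overhead", 0.05)],
         on_no=[Node("implies_scalability_benefit", 0.05)]),
    Node("connects_to_web_services",
         on_yes=[Node("many_concurrent_users", 0.05),
                 Node("multiple_http_requests", 0.05),
                 Node("real_time_features", 0.05)],
         on_no=[Node("abstract_scalability_only", 0.05)]),
    Node("includes_comparison_or_example", 0.10),
]


def score(nodes: list[Node], verdicts: dict[str, bool]) -> float:
    """Sum credit along the branches selected by the verdicts."""
    total = 0.0
    for node in nodes:
        if verdicts.get(node.key, False):
            total += node.credit + score(node.on_yes, verdicts)
        else:
            total += score(node.on_no, verdicts)
    return total


# Hard-coded verdicts for one imaginary answer; unlisted rules count as "no".
verdicts = {
    "defines_async_io": True,
    "mentions_non_blocking": True,
    "mentions_event_driven_model": True,
    "explains_single_thread_connections": True,
    "explains_scalability": True,
    "threads_not_blocked": True,
    "lower_memory_overhead": True,
    "abstract_scalability_only": True,
    "includes_comparison_or_example": True,
}
total = round(score(RUBRIC, verdicts), 2)
print(total)
```

Keeping the rubric as plain data means the same `score` function works for any question; only `RUBRIC` changes.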

Step 3: Result

Each binary path contributes to a total score based on weighted outcomes. The result is fully explainable — you can see exactly which conditions were met, missed, or partially fulfilled.

This structure also supports adjustability: if future graders decide that including an example deserves more weight, that single rule can be changed without rewriting the entire evaluation flow.
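To make the explainability and adjustability points concrete, here is a small sketch that keeps weights in a lookup table separate from the scoring logic. The rule ids, the `report` helper, and the raised weight of 0.15 are hypothetical and chosen only for illustration.

```python
WEIGHTS = {
    "threads_not_blocked": 0.10,
    "efficient_cpu_usage": 0.05,
    "lower_memory_overhead": 0.05,
    "includes_comparison_or_example": 0.10,
}


def report(verdicts: dict[str, bool], weights: dict[str, float]) -> dict:
    """Return the score together with the rules that were met and missed."""
    met = [rule for rule in weights if verdicts.get(rule, False)]
    missed = [rule for rule in weights if rule not in met]
    return {
        "score": round(sum(weights[rule] for rule in met), 2),
        "met": met,
        "missed": missed,
    }


verdicts = {"threads_not_blocked": True, "includes_comparison_or_example": True}
before = report(verdicts, WEIGHTS)

# A later grader decides examples deserve more weight: one value changes,
# while the verdicts and the scoring flow stay exactly the same.
after = report(verdicts, {**WEIGHTS, "includes_comparison_or_example": 0.15})

print(before)
print(after)
```

The `met` and `missed` lists are what make the result auditable: the score can always be traced back to specific conditions.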

Here’s how a simplified version of the evaluation logic might look:

[Start]
├── Q1: Defines async I/O?
│   ├── Yes → Mentions non-blocking?
│   │   ├── Yes → Mentions concurrency? (+0.25)
│   │   └── No → (+0.15)
│   └── No → References concurrency indirectly? (+0.1)
│
├── Q2: Mentions Node.js non-blocking model?
│   ├── Yes → Explains single thread handling? (+0.2)
│   └── No → Implies same concept indirectly? (+0.05)
│
├── Q3: Explains scalability improvement?
│   ├── Yes
│   │   ├── Threads not blocked? (+0.1)
│   │   ├── Efficient CPU? (+0.05)
│   │   └── Low memory overhead? (+0.05)
│   └── No → Implied benefit only? (+0.05)
│
├── Q4: Connects to web services?
│   ├── Yes
│   │   ├── Mentions concurrent users? (+0.05)
│   │   ├── Multiple requests? (+0.05)
│   │   └── Real-time feature? (+0.05 bonus)
│   └── No → Abstract mention? (+0.05)
│
└── Q5: Includes comparison/example? (+0.1 bonus)

So, even though evaluating one answer this way may seem elaborate, the structure keeps scoring consistent, clear, and scalable, which suits automated grading systems where reasoning transparency matters.

Limitations

While Binary Path Evaluation introduces structure and consistency into subjective grading, it is not without its limitations. The most fundamental challenge lies in defining what counts as an observable feature in the first place.

Every evaluation system depends on the quality of its questions — and here, the choice of what to observe determines the entire outcome. If evaluators disagree on which structural signals truly represent a concept like clarity or flow, their binary paths will differ, leading to variation in results even under a deterministic framework.

Another limitation arises from incompleteness. It is rarely possible to identify every relevant observable pattern at once. Early versions of an evaluation model may omit certain features that later turn out to be significant. When those new checks are added, they can shift previous scores, creating a subtle form of non-determinism over time. The framework remains logical, but its definition of “completeness” evolves, meaning consistency depends on how stable the observation set is. Additionally, there is the question of how deep an evaluation path needs to go to be considered exhaustive. Indeed, when evaluating subjective qualities, it is outright impossible to construct an exhaustive evaluation chain.
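A tiny worked example shows how adding a check can shift a score even when the answer and its verdicts stay the same. Everything here is hypothetical: the two rubric versions, the rule ids, the weights, and the `grade` helper. Recording the rubric version next to each score is an assumption made for illustration, not something this article prescribes.

```python
RUBRIC_V1 = {"defines_async_io": 0.25, "explains_scalability": 0.25}
# A later revision adds one check that the first version overlooked.
RUBRIC_V2 = {**RUBRIC_V1, "connects_to_web_services": 0.2}


def grade(verdicts: dict[str, bool], rubric: dict[str, float], version: str) -> dict:
    """Score the same verdicts against a given rubric version."""
    earned = sum(w for rule, w in rubric.items() if verdicts.get(rule, False))
    possible = sum(rubric.values())
    return {
        "rubric_version": version,
        "earned": round(earned, 2),
        "possible": round(possible, 2),
        "ratio": round(earned / possible, 3),
    }


# One answer, judged once; only the rubric changes between the two calls.
verdicts = {"defines_async_io": True, "explains_scalability": True}
old = grade(verdicts, RUBRIC_V1, "v1")
new = grade(verdicts, RUBRIC_V2, "v2")
print(old)
print(new)
```

The earned credit is identical in both runs, yet the share of available credit drops under the second version, which is the drift described above.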

A third limitation is that not all text content types can be meaningfully decomposed. Some works — such as poetry or experimental writing — intentionally reject clear structural logic. Their value often lies in disruption, ambiguity, or emotional resonance, qualities that resist being represented as discrete, observable conditions. For example, a poem that relies on rhythm and suggestion rather than linear structure may fail many binary checks, yet still achieve a profound effect.

In essence, BPE is a useful step towards reliably automating certain grading exercises that involve subjective qualities, and in an era of agentic automation, it presents a huge opportunity for scaling such operations. In another post, I shall discuss how you can attain a high level of accuracy and reliability while also maintaining fairness when applying BPE in delicate cases.